Experiment with an AI application locally using Podman AI Lab

Prerequisites

This lab requires Podman Desktop with the Podman AI Lab extension enabled.

- Podman Desktop: Follow the official documentation: Podman Download

- Podman AI Lab: Follow the installation guide: Podman AI Lab extension
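Before opening Podman Desktop, it can be handy to confirm that the Podman command-line tool is reachable from your shell. The sketch below is only a convenience check: it assumes that installing Podman Desktop also placed a `podman` executable on your `PATH`, which is typical but not guaranteed, and the helper names `find_cli` and `describe` are made up for this example. It does not verify the Podman AI Lab extension, which you can only confirm inside Podman Desktop.

```python
import shutil


def find_cli(name="podman"):
    """Return the full path of a command-line tool on PATH, or None if absent."""
    return shutil.which(name)


def describe(name="podman"):
    """Build a one-line status message for the given tool."""
    path = find_cli(name)
    if path is None:
        return f"{name}: not found on PATH"
    return f"{name}: found at {path}"
```

Calling `print(describe())` reports whether `podman` was found. A "not found" result does not necessarily mean the installation failed; it may only mean that the executable lives outside your `PATH`.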

Introduction

In this lab, you will experiment with AI applications using Podman AI Lab. You will explore various AI applications and integrations, including Llama Stack.

Scenario

You are part of the Python AI Development team. Your responsibility is to experiment with AI applications using Podman AI Lab before building an AI agent in an enterprise environment.

Set up and explore AI tools

Select an AI Model

-

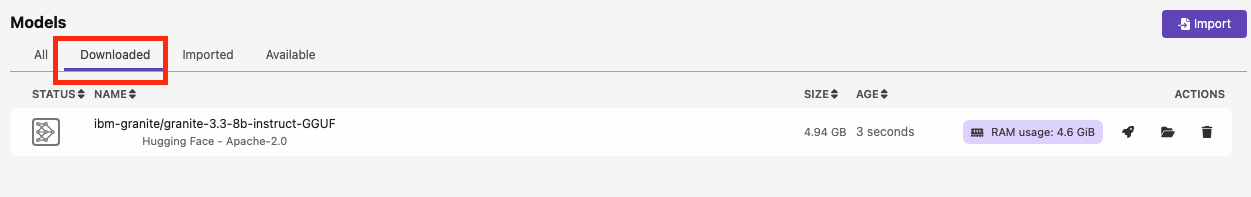

Click Models → Catalog

-

Select and download the model: ibm-granite/granite-3.3-8b-instruct-GGUF

-

Once finished, check the Downloads tab.

Now you can create a service to allow applications to consume the model easily.

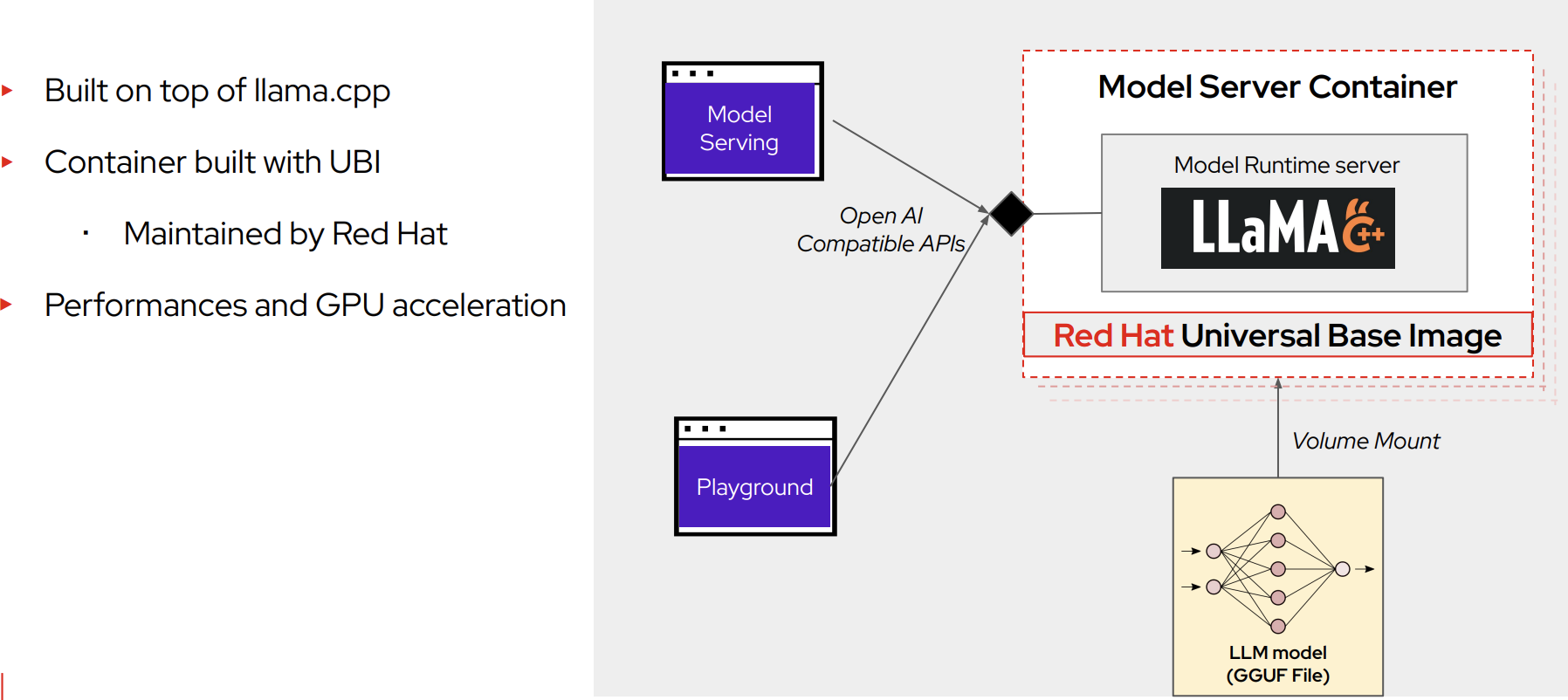

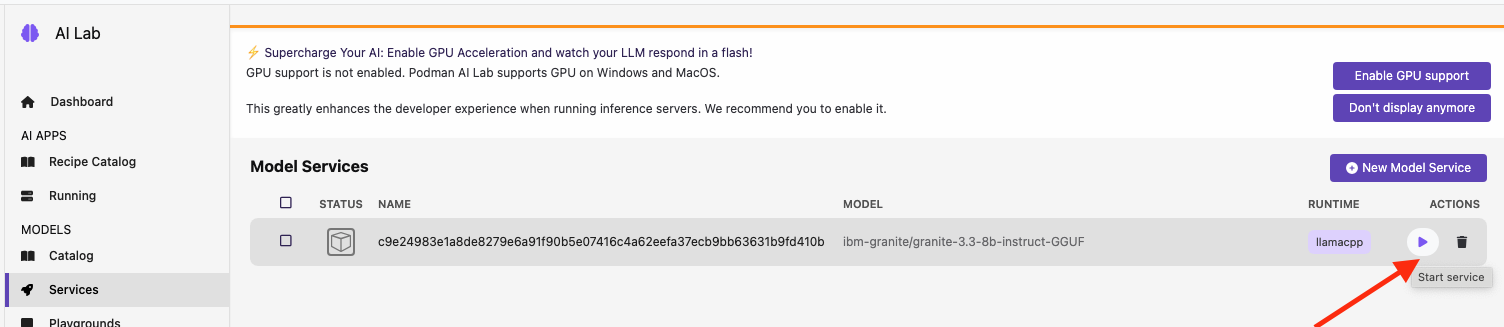

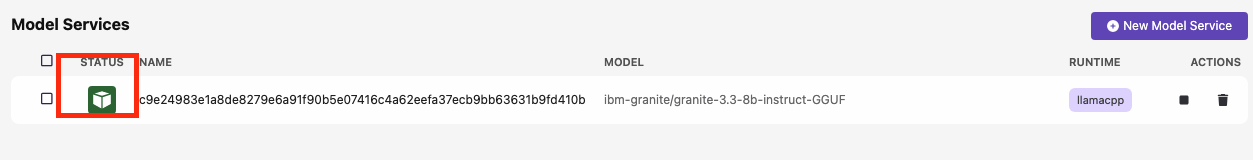

Create a model service

Podman AI Lab lets you create model services and playgrounds for building AI applications. A model service runs inference and exposes the model over HTTP so that AI applications can consume it.

-

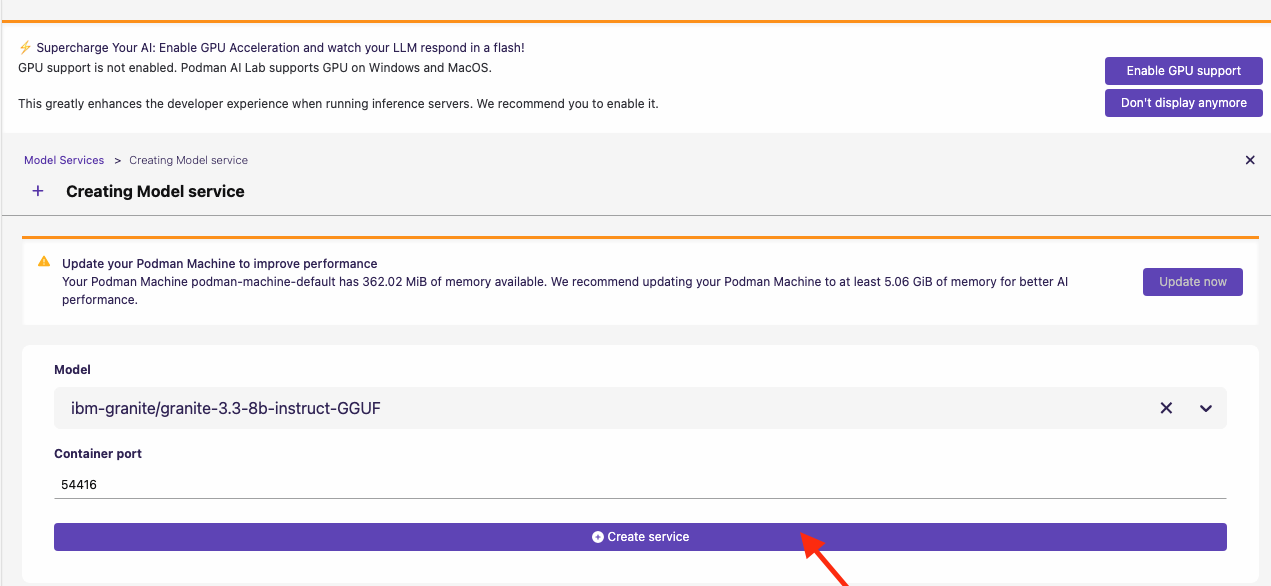

Click Models → Services

-

Click New Model Service

-

Review the information, then click Create Service

-

When the model service is ready, click the start icon.

-

The service is now started and ready to be consumed:

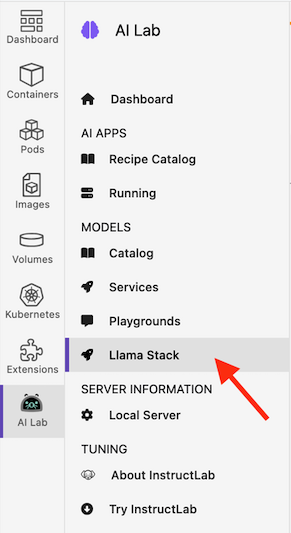

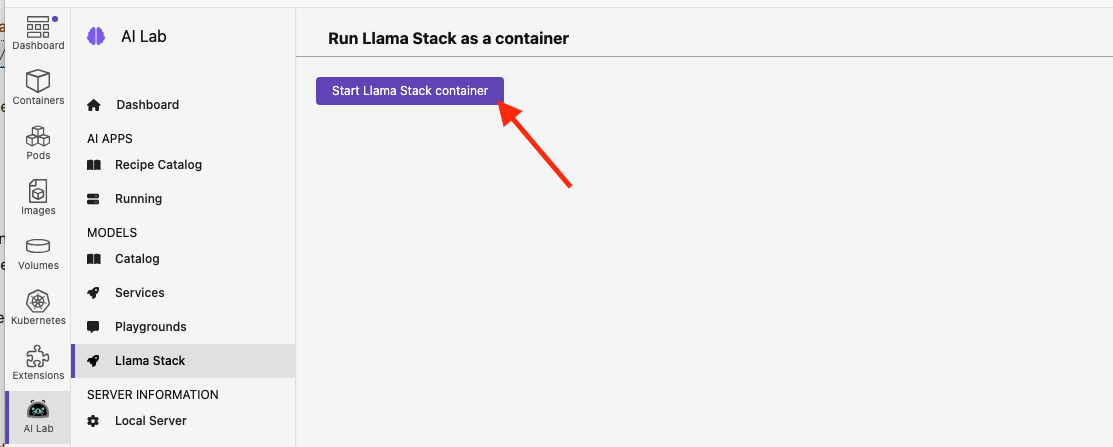
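As a rough illustration of what consuming the model service over HTTP can look like, here is a minimal sketch that uses only the Python standard library. It assumes the service exposes an OpenAI-style `/v1/chat/completions` endpoint; the port `35000` is a placeholder, so use the address shown on your model service's details page instead. The helper names (`build_chat_request`, `extract_reply`, `ask`) are made up for this example.

```python
import json
import urllib.request

# Assumption: placeholder address. Copy the real one from the service details page.
BASE_URL = "http://localhost:35000/v1"
# Model name taken from the download step earlier in this lab.
MODEL = "ibm-granite/granite-3.3-8b-instruct-GGUF"


def build_chat_request(base_url, model, prompt):
    """Build a POST request for an OpenAI-style chat completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body):
    """Return the assistant text from an OpenAI-style chat completion JSON body."""
    data = json.loads(response_body)
    return data["choices"][0]["message"]["content"]


def ask(prompt, base_url=BASE_URL, model=MODEL, timeout=120):
    """Send the prompt to the running model service and return its reply."""
    request = build_chat_request(base_url, model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(response.read().decode("utf-8"))
```

With the service running, `print(ask("What is an AI agent?"))` should print the model's answer. Keeping request construction and response parsing in separate functions makes each piece easy to check without a live service.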

Explore Llama Stack

-

Select Llama Stack from Podman AI Lab

-

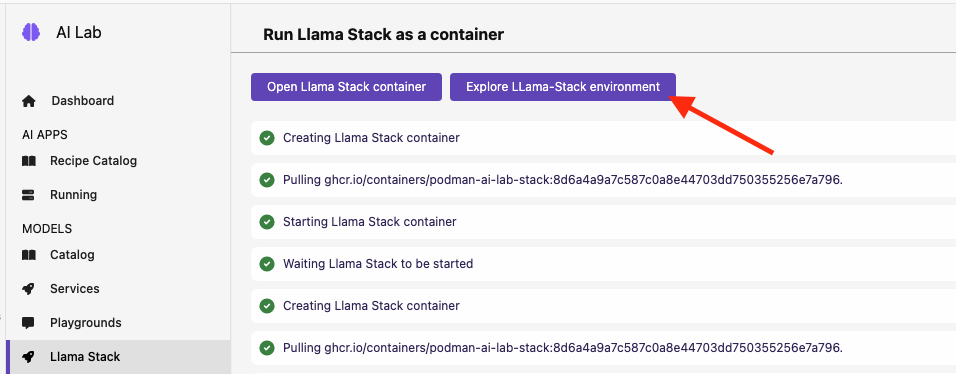

Select Start Llama Stack container:

-

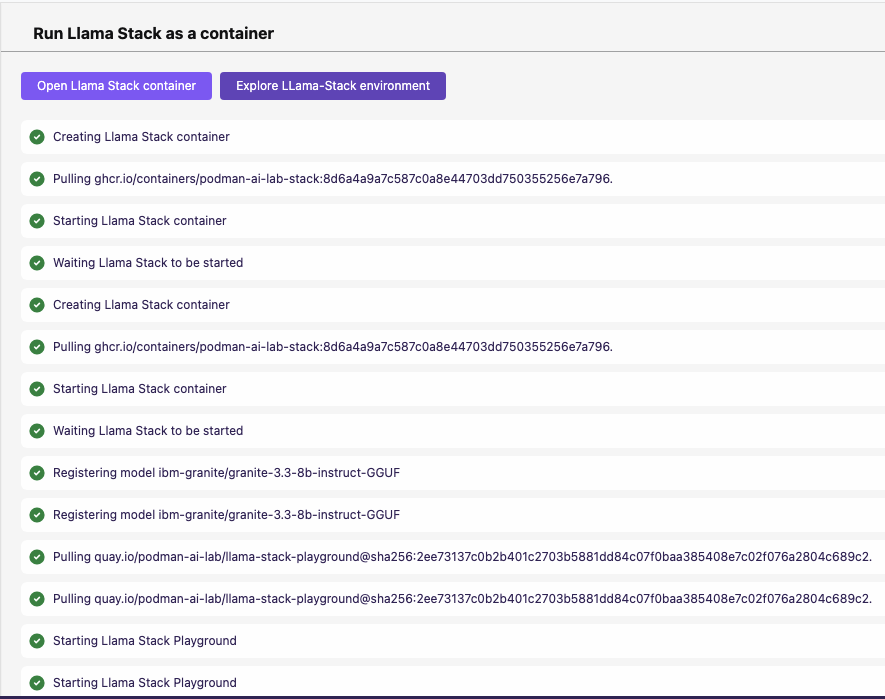

Llama Stack will begin building the container. Once finished, all steps will appear in green.

-

Click Explore Llama-Stack Environment

-

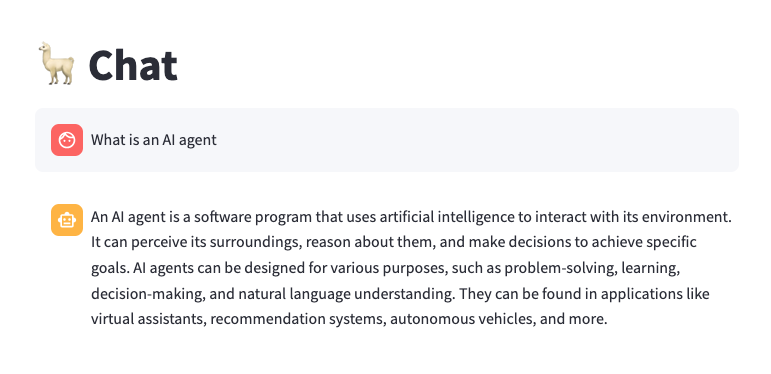

Explore the Llama Stack UI and enter the question, "What is an AI agent" in the chat box:

Use a Podman AI Lab recipe to build a chatbot

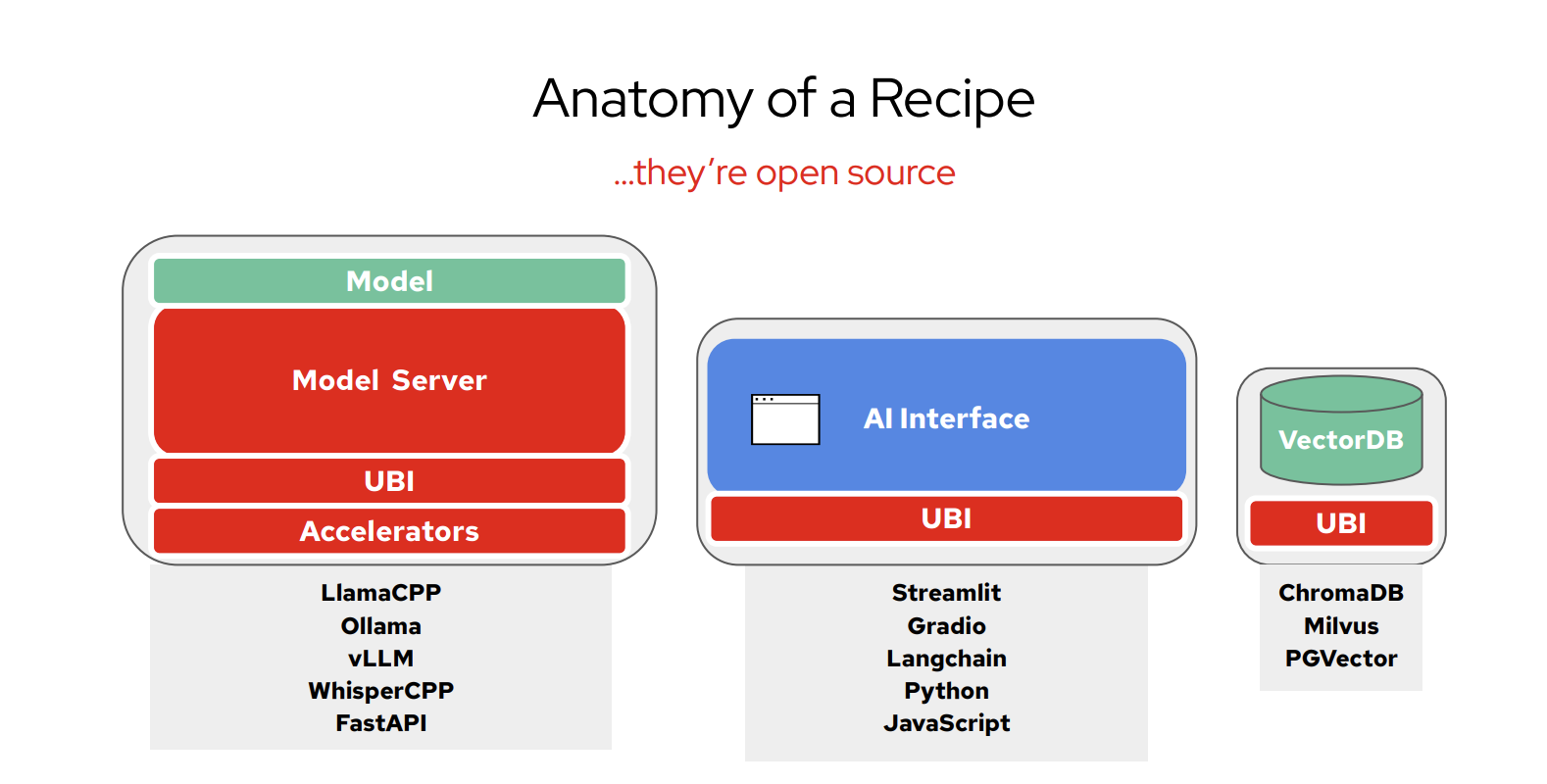

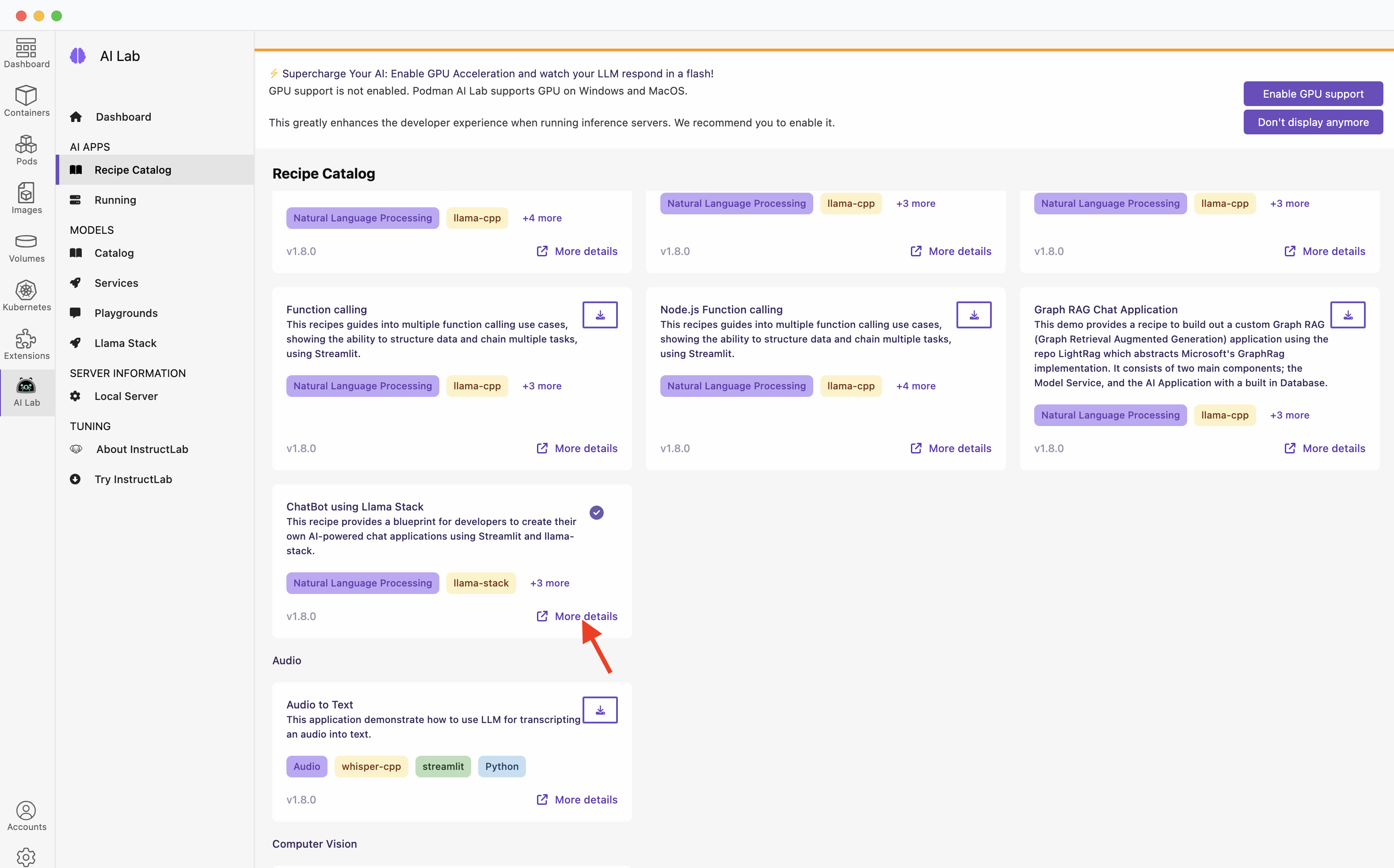

Podman AI Lab provides many recipes you can use as a starting point to build your own applications, explore AI tools, or learn about AI Lab.

- Click Recipe Catalog.

- Explore the recipes available in the Recipe Catalog, then select ChatBot using Llama Stack by clicking More Details.

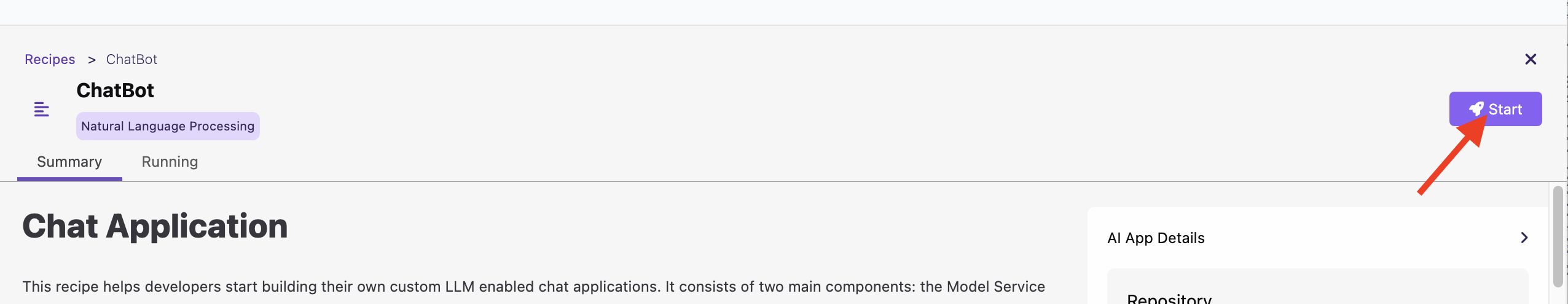

Take time to explore the recipe.

-

Click the start icon.

-

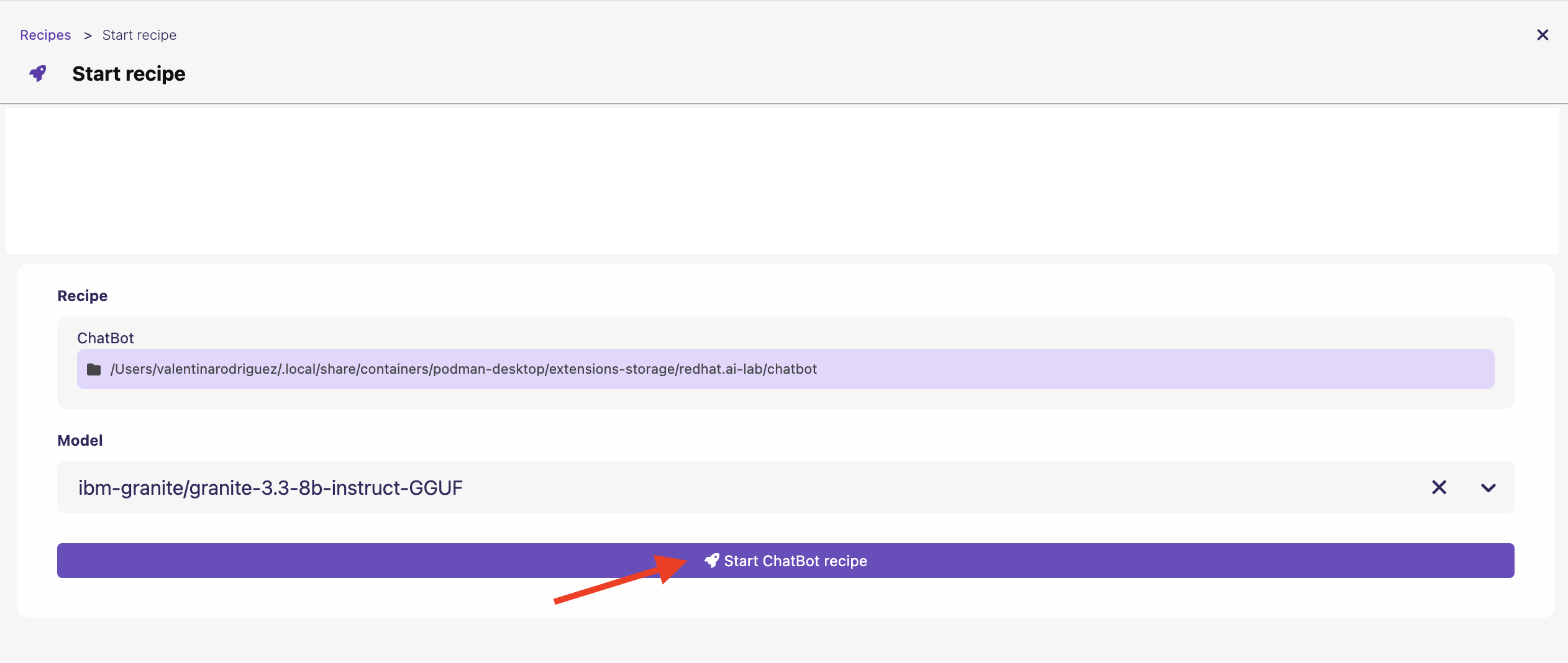

Click Start chatbot recipe to build the chatbot.

-

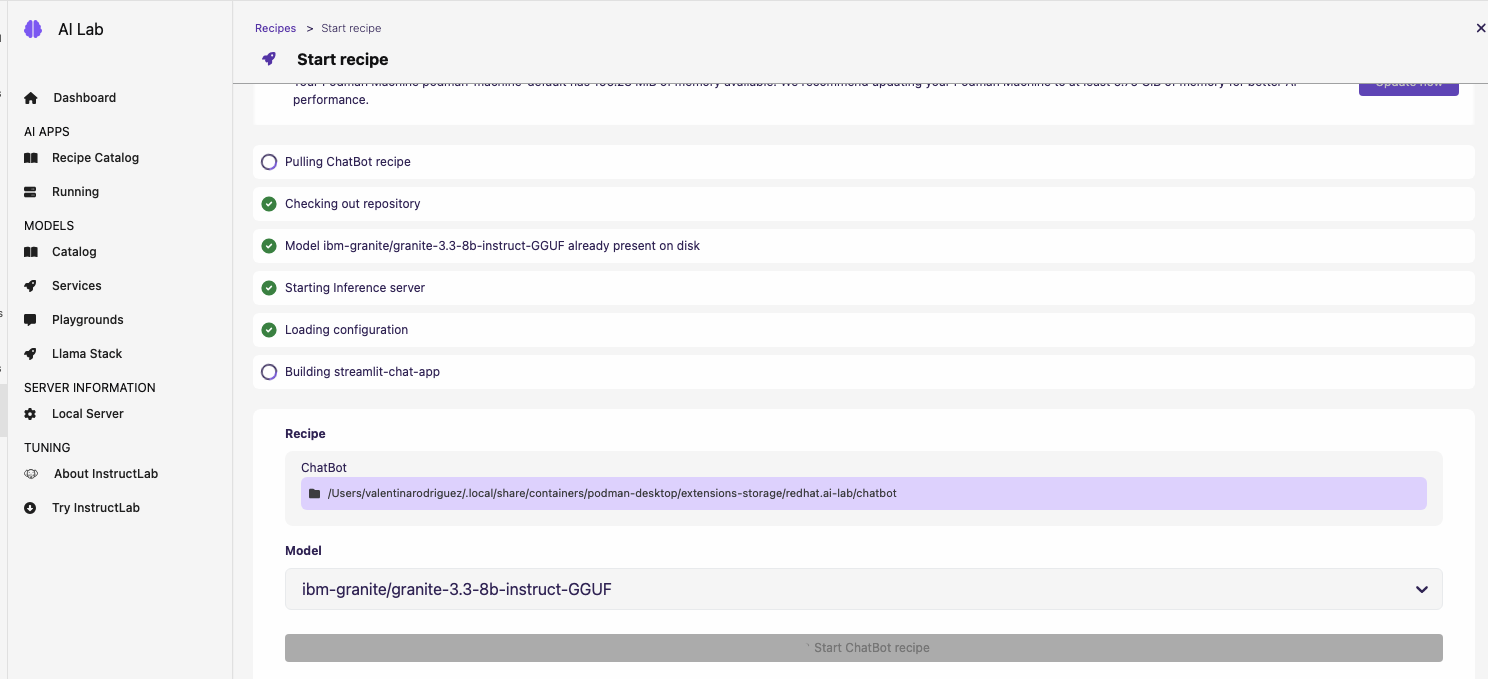

The process will take a few seconds:

-

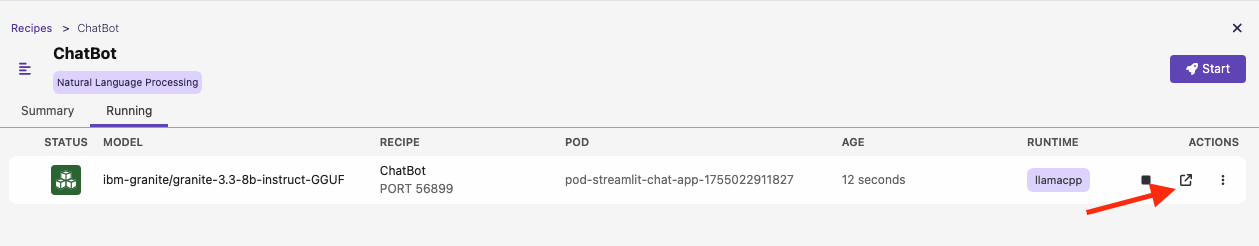

Once the chatbot is ready, Click Open Details

-

To explore the chatbot, click the Open AI App icon.

-

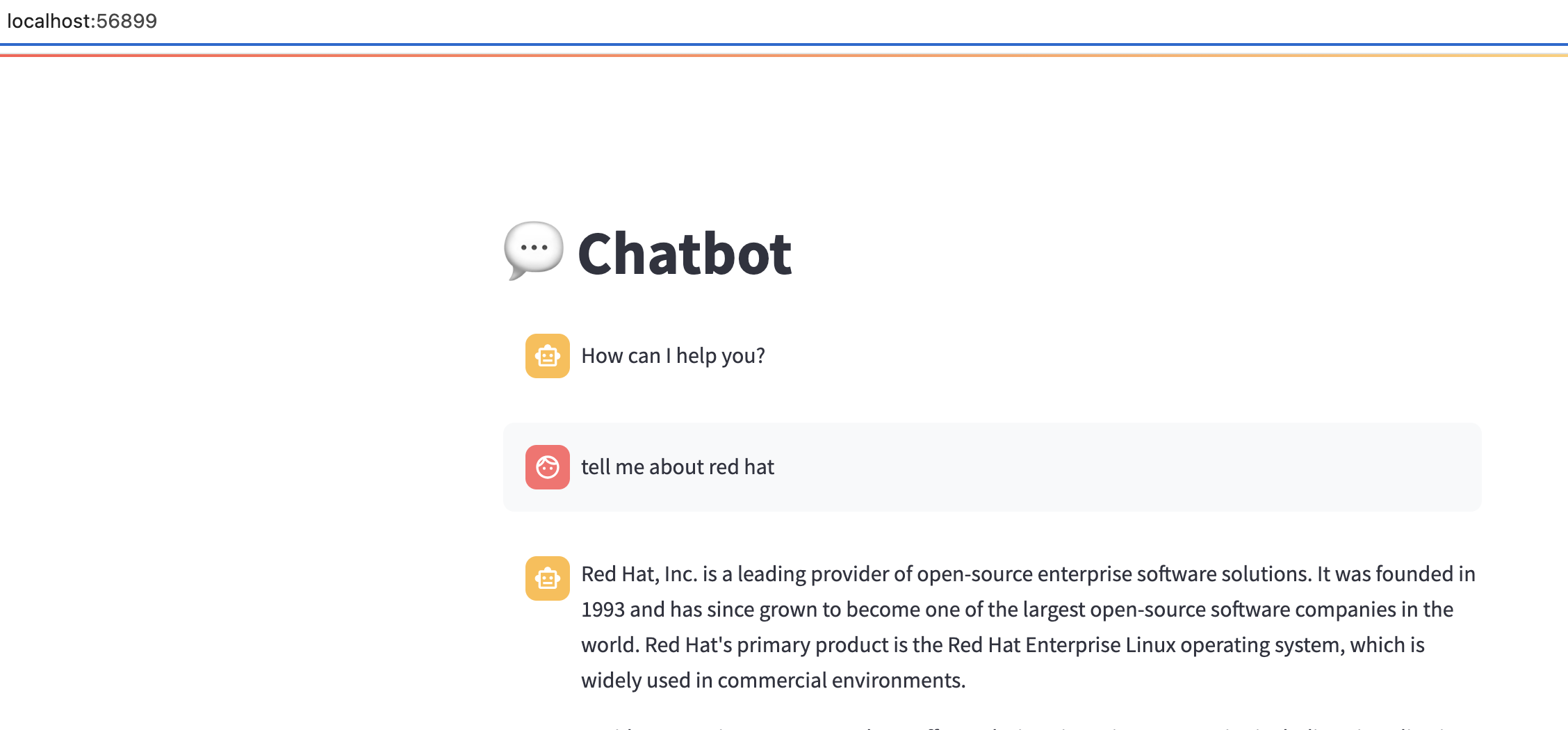

Next, explore and test the chatbot.

Congratulations, you have built an AI chatbot integrated with Llama Stack using Podman AI Lab.

- Next, review the source code.

-

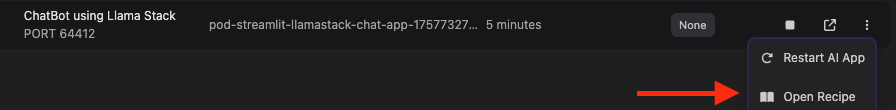

In Podman AI Lab, click AI APPS → Running

-

-

Then, click Open Recipe

-

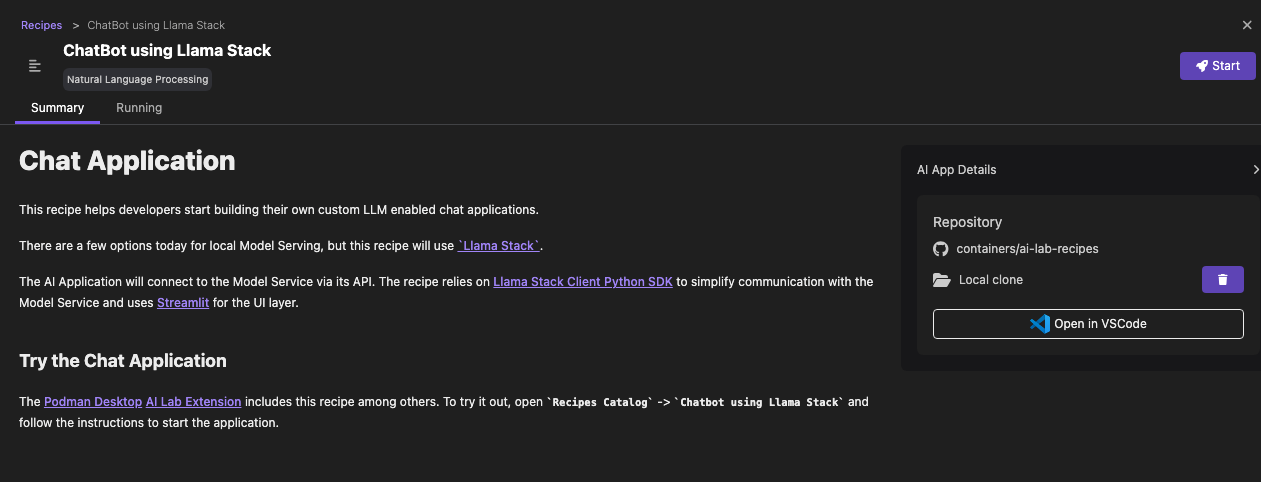

Review the Summary section.

-

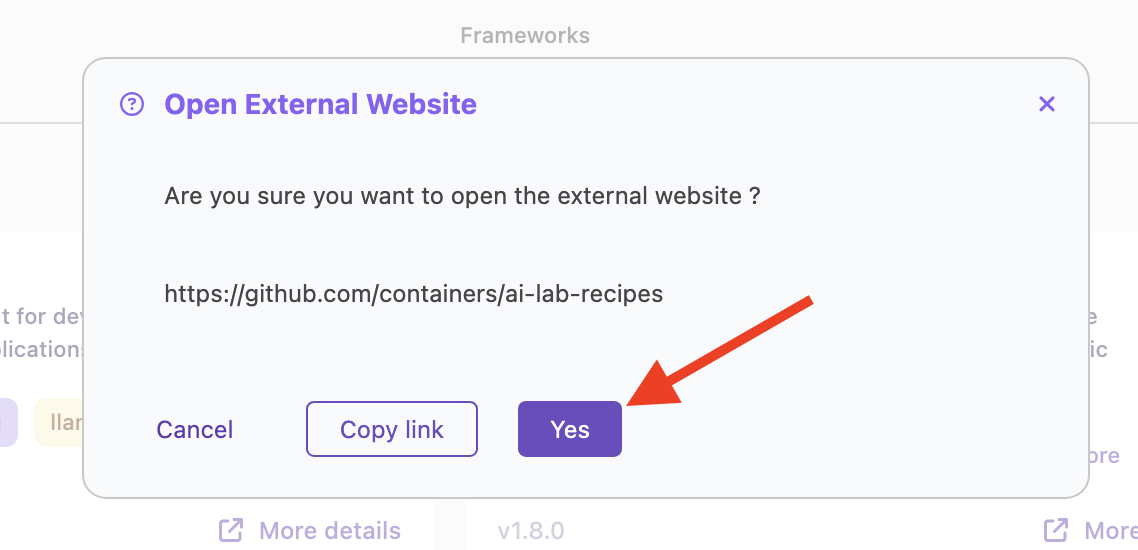

In the Repository section, click containers/ai-lab-recipe

-

Confirm to open external website

-

The GitHub repository includes all the recipes displayed in Podman AI Lab: ai-lab-recipes.

Conclusion

Podman AI Lab is a great resource for experimenting with AI applications: you can learn from recipes, test locally, and try different models.